AI-driven mental health tools are becoming part of modern workplace mental health strategies. Employers hope these tools will offer accessible support, reduce burnout, and give employees more private ways to process stress. The appeal is clear: AI offers scalable, on-demand support that traditional clinical care or employee assistance programs (EAPs) cannot match.

The promise is meaningful.

- Around-the-clock support

- Self-guided emotional reflection

- Early intervention before burnout turns into crisis

- Privacy for people who do not want to disclose mental health struggles directly to HR, managers, or colleagues

But there are serious risks that cannot be ignored. These tools collect emotional and psychological data that is highly personal. In workplace settings, that creates concerns about surveillance, profiling, and unintended monitoring. If trust erodes, employees disengage silently. Research on privacy and trust in HR technology highlights these challenges for adoption and compliance. Analysts are also raising alarms about emotional data in workplace tools and its legal and reputational implications.

Privacy and ethical design are not only compliance issues. They are psychological safety and DEI issues. They shape whether employees feel supported or observed. HR, legal, compliance, and ethics teams need clarity on how these tools work, what data they collect, and how to protect employees while offering meaningful support.

Understanding the Unique Privacy Challenges of Mental Health AI

Mental health support tools process emotional, introspective, and behavior-related signals. This category of data is different from typical HR tech data like attendance, payroll, or learning completion. Emotional expression is fluid and context-heavy.

The distinction matters.

Clinical tools used in therapy follow strict confidentiality and legal frameworks. Workplace wellness tools vary widely in their privacy models. The risk increases when systems infer mental states from indirect signals rather than relying on what users choose to share directly.

Key concerns include:

- Inference and profiling

- Emotional or behavioral categorization

- Unintended secondary use of emotional data

- Cross-context leakage (well-being to performance)

- Exposure to third-party vendors or data brokers

People in anonymous mental health communities often express worry about these boundaries. Users discuss whether AI therapy tools can protect confidentiality and whether emotional data could be used in unintended ways. Others raise broader ethical concerns about AI mental health tools and confidentiality standards.

Sensitive emotional data should never be treated as generic engagement data. It deserves protections closer to healthcare ethics, even when the tool is not clinical.

Legal and Compliance Landscape: Sensitive Data, Consent, and Governance

Mental health data is categorized as sensitive or special-category personal data under several global data protection frameworks. GDPR treats data concerning health, which includes mental health, as a special category under Article 9, so processing requires both a lawful basis and one of Article 9's specific conditions. Even if workplace tools do not diagnose, emotional and introspective signals can still fall within sensitive categories depending on context and use.

Workplace settings add complexity. Employees are not in a neutral position when giving consent. They may fear that opting out could signal poor performance or lack of engagement. For legal and compliance teams, this raises questions around coercion, fairness, and voluntariness.

Core regulatory expectations include:

Lawful Basis for Processing

Employers must identify a valid lawful basis and a clearly stated, specific purpose for processing. Mental health support cannot be bundled with employment obligations.

Explicit Consent in Workplace Settings

Consent must be freely given, informed, and specific. Bundled consent fails legal tests.

Data Minimisation and Retention

Sensitive emotional data should be limited to what is necessary and stored only as long as needed.

Cross-Border Transfers

Vendors must rely on a recognised transfer mechanism, such as an adequacy decision or Standard Contractual Clauses under GDPR, when data moves across jurisdictions.

DPIAs (Data Protection Impact Assessments)

Mental health AI often triggers DPIA requirements due to sensitivity, scale, and risk.
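Data minimisation and retention are easier to audit when they are enforced in code rather than only stated in policy. The Python sketch below is a hypothetical illustration, not a description of any particular vendor's system. It assumes records are plain dicts, that an allowlist of fields (`ALLOWED_FIELDS`) has been fixed by the documented purpose, and that a 30-day window (`RETENTION`) applies; both values are invented for the example and would in practice come out of the DPIA.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical values for illustration only; real ones come from the DPIA.
RETENTION = timedelta(days=30)
ALLOWED_FIELDS = {"session_id", "created_at", "reflection_text"}


def minimise(raw: dict) -> dict:
    """Keep only allowlisted fields; everything else is never stored."""
    return {k: v for k, v in raw.items() if k in ALLOWED_FIELDS}


def purge_expired(records: list[dict], now: datetime) -> list[dict]:
    """Return only the records that are still inside the retention window."""
    return [r for r in records if now - r["created_at"] < RETENTION]


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
raw = {
    "session_id": "s1",
    "created_at": now - timedelta(days=3),
    "reflection_text": "Feeling stretched thin this week.",
    "manager_id": "m42",          # not needed for support, so dropped
    "device_location": "HQ-3F",   # not needed for support, so dropped
}
old = {"session_id": "s0", "created_at": now - timedelta(days=45), "reflection_text": "..."}

kept = purge_expired([minimise(raw), old], now)
print([r["session_id"] for r in kept])  # ['s1']
print(sorted(kept[0]))                  # ['created_at', 'reflection_text', 'session_id']
```

Dropping fields at ingestion means identifiers like a manager ID never reach storage at all, which is a stronger guarantee than filtering them out later at reporting time.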

Legal frameworks are moving toward stricter rules on well-being data, especially when employers introduce analytics, sentiment insights, or behavioral inferences.

Trust and Transparency: Beyond Legal Compliance

Privacy compliance is only the baseline. Ethical adoption demands more. Employees must understand what a mental health tool does, who can see their data, and how insights are used. Meaningful consent must be separate from employment terms. That means no hidden data flows, no dark patterns, and no forced participation.

Credible transparency should address:

- What data is collected

- How it is processed

- What is inferred

- Whether humans can access it

- Whether it impacts HR decisions

- Whether data is shared with third parties

- Whether the tool is monitored, audited, and validated

Trust frameworks for HR technology emphasize this clarity as a condition for adoption and psychological safety. European Commission ethics guidelines similarly argue for explainability and user agency in AI.

The most sensitive issue is workplace power dynamics. Even with high transparency, employees may not believe employers will honor boundaries unless policies explicitly prohibit surveillance or performance use.

The Risk of Re-Identification in “Anonymous” Mental Health Data

Many workplace wellness programs claim to use anonymised or aggregated data. While this reduces exposure, it does not eliminate all risk. Mental health signals are inherently contextual. Emotional patterns can be linked back to individuals when combined with auxiliary datasets such as scheduling metadata, communication patterns, or performance data.
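One standard way to reason about this linkage risk is k-anonymity. A dataset is k-anonymous over a set of quasi-identifiers (attributes such as team or shift that are not names but can narrow down who someone is) when every combination of their values is shared by at least k records. The Python sketch below checks that property. The column names, sample rows, and k value are hypothetical, with `shift` standing in for the scheduling metadata mentioned above. Passing the check is a minimum bar, not a guarantee: if everyone in a group shares the same sensitive value, that value is still disclosed.

```python
from collections import Counter


def group_sizes(rows, quasi_identifiers):
    """Count how many rows share each combination of quasi-identifier values."""
    return Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)


def is_k_anonymous(rows, quasi_identifiers, k):
    """True when every quasi-identifier combination appears at least k times."""
    return all(n >= k for n in group_sizes(rows, quasi_identifiers).values())


def groups_below_k(rows, quasi_identifiers, k):
    """Return the combinations small enough to single out individuals."""
    return {key: n for key, n in group_sizes(rows, quasi_identifiers).items() if n < k}


# Hypothetical "anonymised" wellness export: no names, but team + shift remain.
rows = [
    {"team": "Finance", "shift": "night", "stress_level": "high"},
    {"team": "Finance", "shift": "day", "stress_level": "low"},
    {"team": "Finance", "shift": "day", "stress_level": "medium"},
    {"team": "Support", "shift": "day", "stress_level": "high"},
    {"team": "Support", "shift": "day", "stress_level": "low"},
]
qi = ["team", "shift"]

print(is_k_anonymous(rows, qi, k=2))  # False
print(groups_below_k(rows, qi, k=2))  # {('Finance', 'night'): 1}
```

Here the only night-shift Finance employee is uniquely identifiable, so anyone who knows the roster learns their stress level even though the export contains no names. Coarsening or dropping the `shift` column restores the property for this sample.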

De-identification research shows that mental health data is among the easiest categories to re-identify when cross-referenced with external behavioural or demographic variables. Even data that meets a formal de-identification standard, such as HIPAA's Safe Harbor or Expert Determination methods, can still carry re-identification risk once it is combined with workplace datasets.

This creates an ethical dilemma for employers and HR teams. Emotional data that feels private to employees may become informationally rich for enterprise vendors. Without explicit boundaries in place, the gap between perception and reality can erode trust. Employees often assume that anything involving emotional or psychological reflection should remain confidential, not part of performance analytics or enterprise reporting.

Mental health data should be treated with the same caution as clinical data even when tools are not used in clinical contexts. Safety and ethics require conservative assumptions, not optimistic ones.

Monitoring, Auditability, and Third-Party Risk Management

When employers introduce AI mental health tools, they introduce vendor risk. This is no longer just IT procurement; it touches privacy, ethics, and legal compliance. AI governance frameworks note the need for transparency, oversight, and continuous monitoring.

Workplace mental health tools also raise questions about duty of care and unintended harm. Unlike typical HR technologies, they influence behaviour, emotion, and self-perception.

Best practice for governance includes:

Independent Algorithmic Audits

Audits should assess fairness, bias, harm, and safety, not just accuracy.

Supplier Risk and SLAs

Contracts must articulate privacy guarantees, data-use limits, and non-surveillance commitments.

Comprehensive DPIAs

DPIAs should assess legal, psychological, and contextual risks before deployment.

Ongoing Impact Assessments

Mental health AI systems can drift over time and must be re-evaluated periodically.

Clear Escalation Paths

When a user shows signs of acute distress, the tool should direct them to human or clinical help. It should not be treated as a crisis intervention system without clinical and ethical safeguards.

The OECD AI Principles emphasize transparency, accountability, and human-centred values, all of which apply directly to employee-facing AI. Ethical deployment requires collaboration among HR, privacy, security, compliance, and DEI teams rather than isolated decision-making.

Why Ethical Guardrails Are Essential for Mental Health AI

AI for mental health support occupies a sensitive space. It is not clinical therapy, nor is it simple wellness software. It sits between psychology, self-help, and workplace culture. Without guardrails, tools can drift toward surveillance or optimization, both of which risk employee harm.

Key ethical concerns include:

- Autonomy and agency

- Fairness and cultural context

- Consent and voluntariness

- Non-maleficence (avoiding harm)

- Purpose limitation

- Power asymmetry in employer-employee relationships

AI ethics organisations emphasize that fairness is not the only standard; trust and dignity matter just as much. This becomes even more important in mental health contexts, where stigma and vulnerability intersect. Employees need reassurance that support tools are not a channel for categorisation, monitoring, or indirect performance scoring.

A privacy-first model treats emotional wellbeing as belonging to the employee, not the enterprise. Ethical AI gives employees control over their own internal world rather than extracting it for organisational insight.

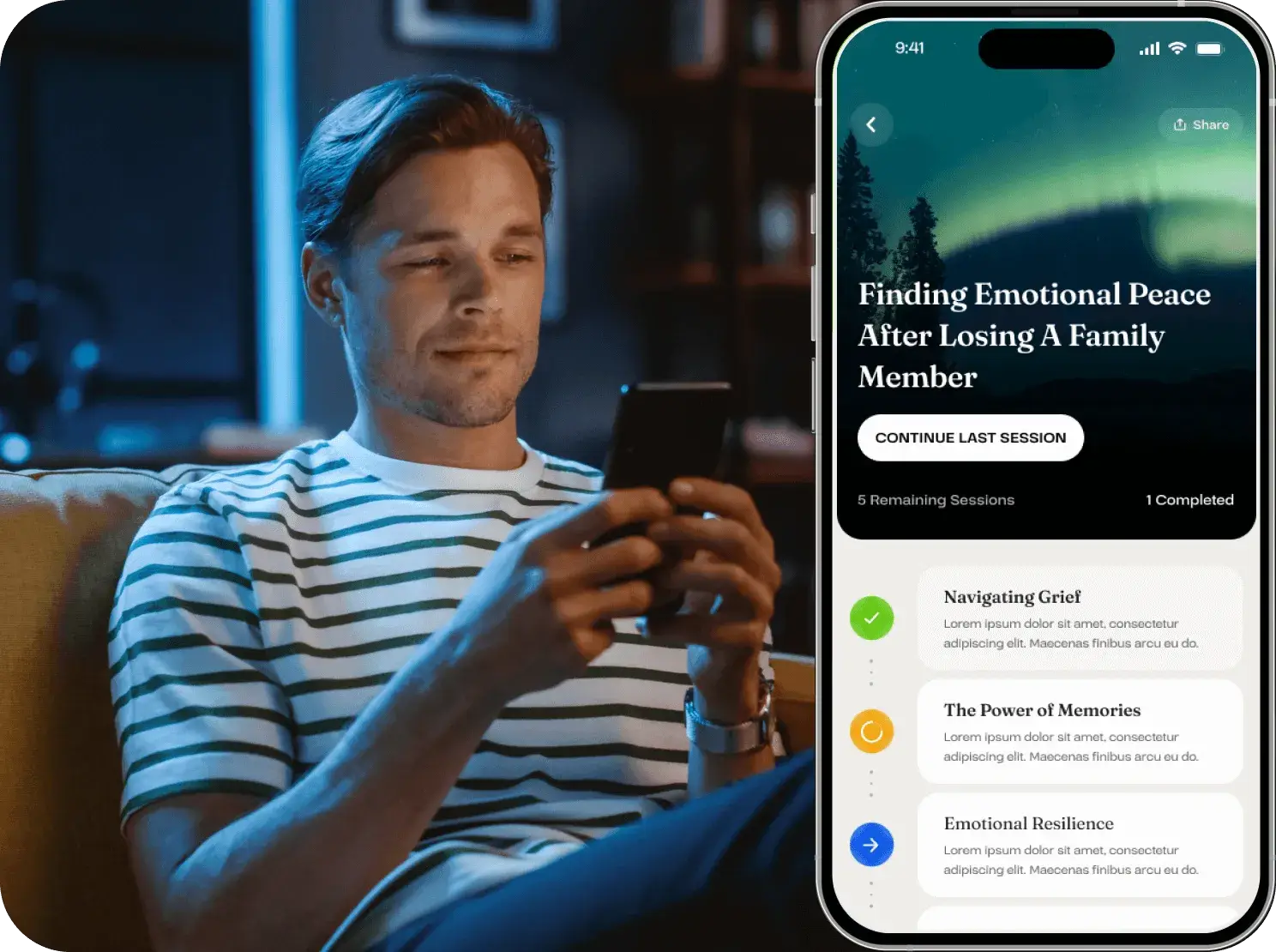

How Yuna Approaches Ethical and Privacy-Aligned Mental Health AI

Bias, privacy, surveillance, and trust are not secondary issues in mental health AI. They determine whether employees feel safe enough to engage. Workplace mental health support fails when people fear being observed, judged, or profiled.

Tools in this space must be designed with privacy and dignity from the beginning, not retrofitted under pressure. That means:

- Minimal data collection

- No behavioural scoring

- No hidden inference models

- No HR visibility into employee reflections

- No surveillance dynamics

- No diagnostic claims

- No performance impact

Yuna’s design philosophy follows these principles. It provides private, self-guided emotional support for everyday stress, burnout, rumination, self-doubt, and workload anxiety. It is built to empower individuals, not to diagnose or categorise them. It does not provide data to employers, does not create employee profiles, and does not use emotional signals for optimization or control.

For HR, legal, and DEI leaders, this matters. Support tools must be fair, equitable, culturally aware, and privacy-protective. They must expand access without increasing risk. A trust-preserving approach aligns with GDPR expectations, DPIA frameworks, and emerging ethics standards around AI and wellbeing.

Workplace mental health will increasingly depend on digital tools. The winners will be systems that respect humanity as much as they support productivity.