Mental health demand is rising faster than systems can respond. Roughly one in five adults in the USA experiences a mental illness each year, with anxiety and depression among the most common. Yet access to care remains constrained by therapist shortages, long waitlists, and rising costs. For HR leaders and practitioners, inaction now carries measurable risks.

Digital mental health tools are already filling critical gaps. However, not all AI chatbots are designed for therapeutic support. Specialised mental health chatbots like Yuna are built to complement therapy, not replace it. They provide continuous, stigma-free support while respecting clinical boundaries.

This blog argues for a deliberate, balanced integration: one that uses specialised AI companions to strengthen therapy outcomes responsibly. The message is clear. Organizations must act with intention, or risk leaving mental health outcomes to chance.

The Role of Specialised Chatbots in Enhancing Therapy Outcomes

Specialised chatbots are not experimental tools. They already influence how people regulate emotions, reflect, and stay engaged between therapy sessions. The real question is whether organizations guide this usage responsibly or ignore it entirely.

Improving Accessibility and Continuity of Care

Specialised chatbots offer immediate emotional support between therapy sessions, bridging the gaps where motivation often collapses. Left unaddressed, these gaps frequently lead to disengagement, missed appointments, or emotional relapse.

For individuals facing geographic barriers, cost constraints, or stigma, specialised chatbots provide a safe and structured first step. They also give remote workers a consistent source of support, wherever they work.

Many users report feeling safer opening up to AI-based companions during emotionally vulnerable moments. When designed responsibly, AI support helps normalize mental health conversations without replacing human care.

For HR leaders, this continuity reduces attrition from wellness programs. For therapists, it preserves momentum between sessions. The action step is clear. Digital mental health support must be intentional, not accidental.

Reinforcing Psychoeducation and Coping Skills

Therapy is not limited to conversations. It involves learning, repetition, and daily application. Specialised chatbots reinforce therapy concepts when real-life stress appears. They prompt breathing exercises, cognitive reframing, and grounding techniques at the moment of need.

Studies suggest that mental health chatbots can help reduce anxiety and depression symptoms in mild-to-moderate cases, especially when aligned with professional care. That evidence matters for decision-makers.

Chatbots should not replace therapeutic homework. They should become part of it. When integrated correctly, specialised AI extends therapy into everyday life, where real stress actually occurs.

Enhancing Self-Monitoring and Emotional Awareness

Awareness precedes improvement. Specialised chatbots help users track moods, behaviors, and emotional triggers consistently. Validated questionnaires such as the PHQ-9 (depression) and GAD-7 (anxiety) become part of regular reflection, not occasional assessment.

Research from the National Institutes of Health shows digital self-monitoring improves emotional insight and early help-seeking. Users notice patterns earlier and respond sooner.

Therapists can review AI-generated summaries to guide sessions more efficiently. HR teams can encourage reflective habits without intrusive monitoring. The strategic opportunity lies in converting awareness into timely action.

Why HR Leaders Must Act Now, Not Later

Mental health behaviors are already changing, with or without organizational guidance. Employees are using AI tools independently, often without oversight. When HR leaders delay action, they lose influence over safety, alignment, and outcomes.

Ignoring specialised chatbot usage does not prevent adoption. It simply shifts responsibility away from leadership. Employees adopt digital mental health tools faster than workplace policies evolve. This gap increases risk, inconsistency, and confusion across teams.

Proactive leadership allows organizations to set guardrails early. It ensures AI reinforces professional care instead of replacing it. Acting now protects employees and reduces long-term reputational risk.

The Cost of Doing Nothing

Disengagement often begins quietly between therapy sessions. Small emotional setbacks compound when support feels distant or unavailable. Over time, this leads to missed sessions, stalled progress, and eventual burnout.

Organizations pay for this disengagement through absenteeism, turnover, and reduced performance. Digital tools already shape employee coping behaviors daily. When leaders fail to guide usage, outcomes become unpredictable.

Responsible integration costs less than crisis recovery. Early support reduces escalation and reliance on reactive, crisis-driven care. For HR leaders, inaction is no longer neutral. It is a strategic liability.

From Passive Tools to Active Support Systems

Most organizations treat chatbots as optional wellness add-ons. This limits impact and weakens accountability. Specialised chatbots work best when positioned as active extensions of care.

This means setting usage guidelines, aligning with therapists, and educating employees clearly. It also means choosing platforms designed for collaboration, not isolation. When HR teams integrate AI thoughtfully, organizations can build emotionally resilient teams.

When AI companions become structured supports, not silent tools, engagement improves. Users feel supported, not abandoned. Therapists gain continuity. HR gains insight without surveillance.

This shift transforms supplementation into strategy.

Accepting Limitations: What Chatbots Can’t Replace

Responsible leadership requires honesty about boundaries. Overpromising creates risk, while under-regulating creates harm.

Lack of Genuine Empathy and Human Nuance

AI-powered mental health apps can simulate empathy, but they cannot truly understand emotional context. Healing relies on relational depth, trust, and human presence. Research consistently shows that the therapeutic alliance remains irreplaceable.

This limitation does not invalidate AI. It defines its role. Specialised chatbots should support reflection, not replace human connection.

Inadequate for Crisis or Complex Conditions

Even specialised chatbots are not crisis tools. They may fail to interpret severe distress without human oversight.

Organizations must implement escalation pathways and human involvement. Ignoring this responsibility invites ethical and legal consequences.

Ethical and Privacy Considerations

Trust determines engagement in the mental health market. Mental health data is deeply personal, yet some platforms store conversations on third-party servers or reuse them for model training.

However, HR leaders can and must demand encryption, consent clarity, and regulatory compliance. Ethical shortcuts damage credibility, trust, and long-term adoption.

Best Practices for a Balanced Integration

Knowing the risks is not enough. Leaders must operationalize safeguards deliberately. Mental health apps must follow a balanced approach to make sure users are getting the best possible care without sacrificing privacy or trust.

Open Communication Between Therapists and Users

Clients should discuss AI companion use openly with therapists. Alignment ensures tools reinforce treatment rather than disrupt it. Therapists can interpret insights meaningfully. Silence creates fragmentation. Dialogue creates cohesion.

Setting Realistic Expectations

Specialised chatbots should be framed as mental health coaching companions, not clinicians. Clear messaging prevents dependency and misuse. Professional intervention remains essential for trauma, diagnosis, and crisis care.

Choosing Clinically Validated Tools

Decision-makers must prioritize evidence-backed platforms. Peer-reviewed validation and transparent policies are essential. Choosing specialised, clinically informed tools protects both users and organizations.

Yuna’s Role in Supporting a Balanced Therapeutic Ecosystem

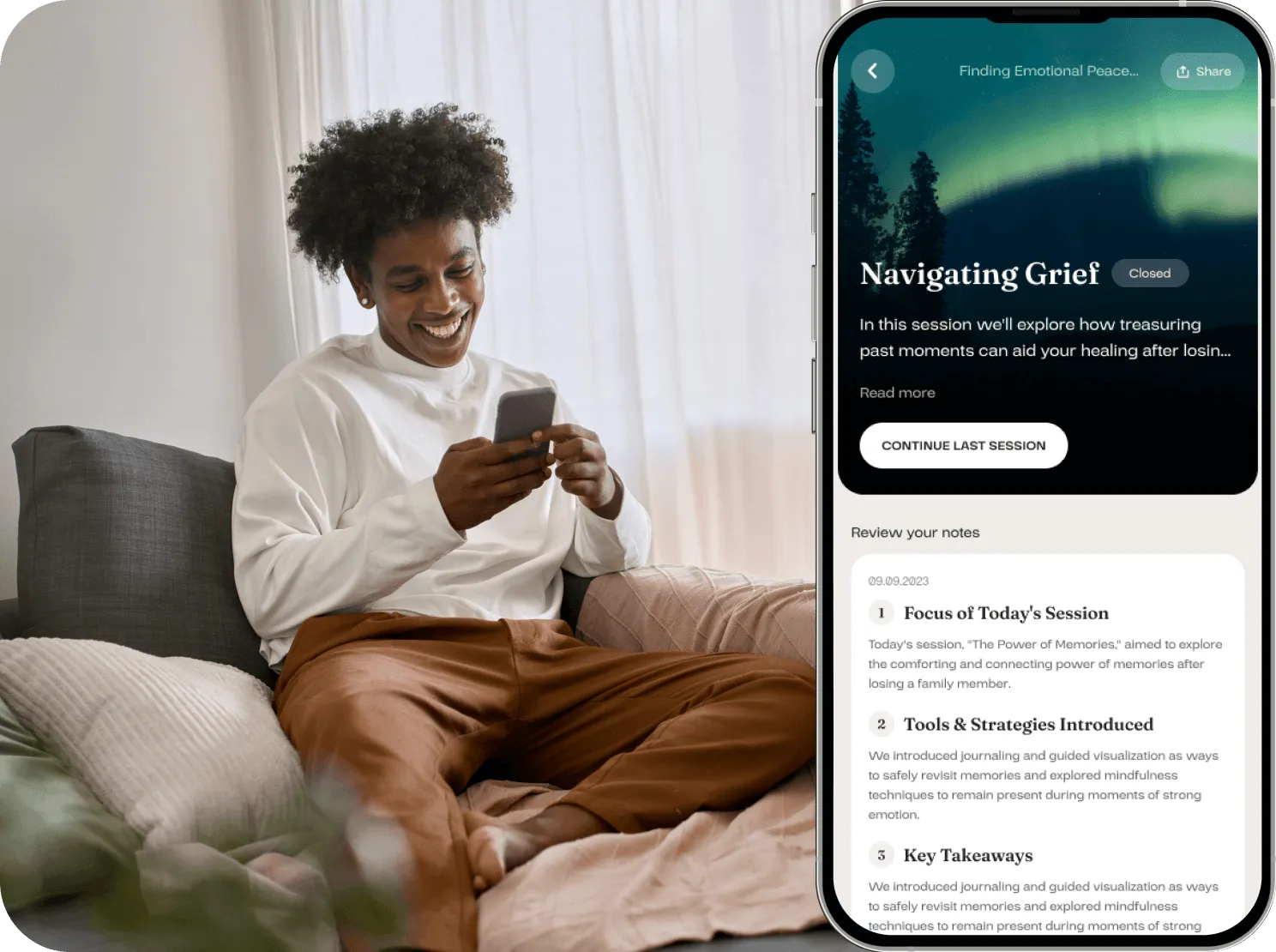

Action matters most at the point of implementation. Yuna exists to turn responsible intent into daily practice. It is not a generic chatbot. Yuna Health is a specialised mental health companion designed to supplement therapy safely and ethically.

Yuna supports users between sessions through reflective check-ins, emotional awareness, and personalized guidance. Its design is grounded in clinically informed psychological frameworks and refined continuously with expert input. When complex emotional signals emerge, Yuna enables escalation rather than silence. This human-in-the-loop approach protects users while maintaining accessibility.

For HR leaders, Yuna integrates into wellness ecosystems without compromising privacy. Aggregated insights support responsible workforce well-being decisions. Its architecture aligns with GDPR requirements and ethical AI principles, strengthening trust and making it a reliable complement to an employee assistance program (EAP).

Most importantly, Yuna keeps people engaged between moments of professional support. It bridges gaps where disengagement often begins. By aligning digital empathy with human expertise, Yuna transforms supplementation into strategy.

FAQs

1. Can artificial intelligence enhance the effectiveness of mental health therapy?

AI can enhance therapy when used as a supportive layer, not a replacement. Specialised AI tools help maintain emotional continuity between sessions, reinforce coping skills, and encourage self-reflection. Platforms like Yuna act as mental health coaches, supporting awareness and engagement while leaving diagnosis and treatment decisions to qualified professionals.

2. Are generic AI chatbots safe to use for mental health support?

Generic AI chatbots can offer basic emotional support, but they have clear limitations. They lack clinical alignment, escalation pathways, and deep emotional context. For safe and effective use, individuals and organizations should choose specialised platforms like Yuna, designed to supplement therapy responsibly with ethical guardrails and human oversight.

3. Is AI likely to replace human counselling or therapy in the future?

AI is unlikely to replace counselling or therapy, especially where empathy, trust, and clinical judgment are required. Instead, AI will complement human care by improving access and continuity. Yuna follows this model by supporting users between sessions while ensuring human professionals remain central to mental health treatment.

4. What does an AI chatbot for therapy actually do?

An AI chatbot for therapy supports emotional reflection, stress awareness, and coping practice through guided conversations. Specialised tools like Yuna go further by using clinically informed frameworks and personalised insights. They help users stay engaged and self-aware between therapy sessions without attempting diagnosis or clinical treatment.

5. Is it appropriate to use AI tools to support personal mental well-being?

Yes, AI tools can support mental well-being when used responsibly. They are helpful for reflection, emotional check-ins, and stress management. Yuna is designed for this purpose, offering private, judgment-free coaching while respecting boundaries and encouraging professional care when deeper support is needed.